I’m sure there are many blogs about home labs and setups. They all look pretty much the same, except each one has a base-level setup and a set of deviations.

My setup is almost the same, but my deviations are tied to what I’m attempting to do.

Backstory later, lab config now:

- 3 x NUC Hades Canyon NUC8i7HVK models

- Each NUC has an Intel(R) Core(TM) i7-8809G CPU @ 3.10GHz (approximately 37GHz of processing power across the three hosts)

- Each NUC has 64GB of DDR4 RAM (Corsair Vengeance), total 192GB RAM

- All NUCs have Dual 1Gbps NICs

- All NUCs have 2 x 500GB SSDs (non-RAID setup, more on this in another post)

- Single Cisco SG350-10 (10-port) L3 gigabit switch (yes, the lack of redundancy is painful)

- NUCs are running vSphere 6.7 managed by vCenter. I chose vSphere since it’s a commonly used hypervisor, well known, and supported by the vCommunity.

- I have deployed a Windows Server 2016 VM to support DHCP, DNS, and AD. DNS is extremely important for vCenter and vSphere in general. dnsmasq and BIND were the original DNS servers, but painful management drove me to something simpler.

- 1 x QNAP TS-228A, with two hard drive bays, and one 1Gbps NIC. I loaded this up with 2x2TB SSDs in RAID1, and served up NFS exports to my vSphere cluster, to serve as a shared datastore. Yes, there is a bottleneck here.

- Power-line Ethernet (more in another post)

- Other components

The above setup is supposed to represent my “mini-datacenter”, where I can effectively deploy a variety of publicly available apps, or build my own.
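As a quick sanity check on the numbers in the list above, here is a small Python sketch that derives the cluster totals and the line-rate ceiling of the single 1Gbps NAS link. The core count per CPU is my assumption about the i7-8809G (4 cores), and the throughput figure ignores protocol overhead, so treat it as an upper bound rather than a measurement.

```python
# Lab totals derived from the hardware list above.
HOSTS = 3
CORES_PER_HOST = 4        # assumption: the i7-8809G is a 4-core part
BASE_CLOCK_GHZ = 3.1
RAM_PER_HOST_GB = 64
NAS_LINK_GBPS = 1         # the QNAP has a single 1Gbps NIC


def cluster_totals():
    """Return aggregate CPU, RAM, and the NAS link ceiling."""
    cpu_ghz = HOSTS * CORES_PER_HOST * BASE_CLOCK_GHZ
    ram_gb = HOSTS * RAM_PER_HOST_GB
    # Line rate in megabytes per second, before any NFS/TCP overhead.
    nas_mb_per_s = NAS_LINK_GBPS * 1000 / 8
    return {
        "cpu_ghz": round(cpu_ghz, 1),
        "ram_gb": ram_gb,
        "nas_mb_per_s": nas_mb_per_s,
        # Fair share if all three hosts hit the datastore at once.
        "nas_mb_per_s_per_host": round(nas_mb_per_s / HOSTS, 1),
    }


if __name__ == "__main__":
    print(cluster_totals())
```

The per-host share is why the NFS datastore is the bottleneck: all three hosts funnel through one gigabit port.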

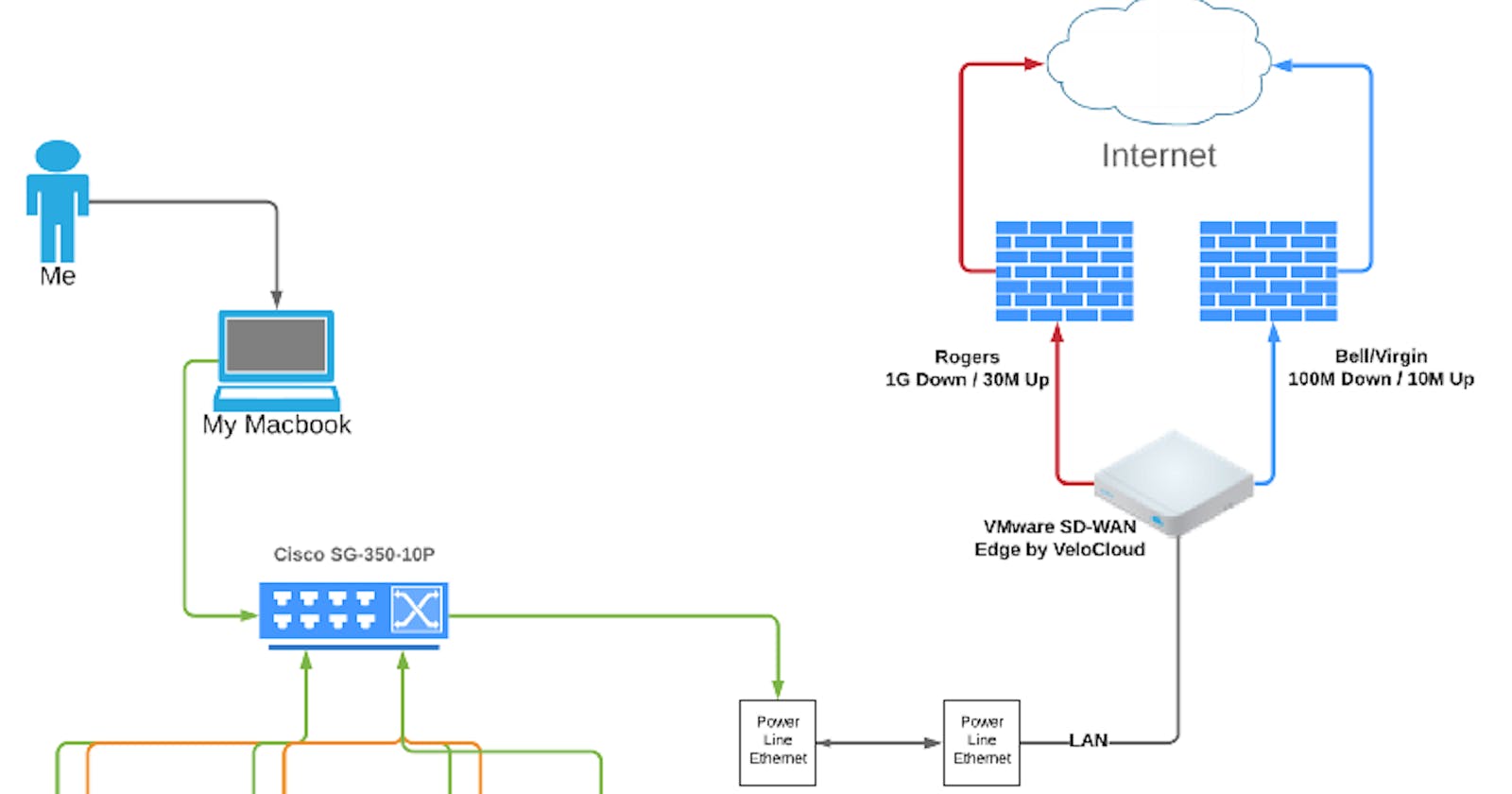

And for those curious about the topology:

With respect to Networking

I called out using a Cisco L3 SMB switch mainly because of cost, and ease-of-use and deployment. There are definitely other options out there but this will suffice for my lab needs.

I aimed to simplify the network configuration and leverage as flat a network as possible. Flat network = a single /24 to handle management connectivity and some other things (vMotion, NFS). The other reason for taking this approach is to eventually slap on NSX-T. As I go along, I’ll update my networking to support various other services and provide more network real estate to apps.
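To put a number on “flat”, here is a minimal sketch using Python’s standard `ipaddress` module. The prefix `192.168.10.0/24` and the host addresses are hypothetical (the actual lab addressing isn’t listed here); the point is only that one /24 gives 254 usable addresses and that management, vMotion, and NFS interfaces all sit in the same segment.

```python
import ipaddress

# Hypothetical prefix, chosen only for illustration.
FLAT_NET = ipaddress.ip_network("192.168.10.0/24")


def usable_hosts(net):
    """Usable addresses, excluding the network and broadcast addresses."""
    return net.num_addresses - 2


def same_segment(net, *addrs):
    """True if every address falls inside the given network."""
    return all(ipaddress.ip_address(a) in net for a in addrs)


if __name__ == "__main__":
    print(usable_hosts(FLAT_NET))
    # Hypothetical management, vMotion, and NFS addresses.
    print(same_segment(FLAT_NET, "192.168.10.11", "192.168.10.21", "192.168.10.50"))
```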

For those unaware, NSX-T is a software-defined hybrid-multi-cloud application platform providing distributed virtualized networking and security services.

With NSX-T, my applications/VMs run on top of software-based virtual networks. This allows me to spin up network prefixes with associated gateways that are distributed, without ever changing the underlying infrastructure. I can do this in a highly repeatable fashion, using automation. Simply put, this is achieved using overlay technologies and distributed routing/firewalling. “Just-in-time Network and Security” is what I like to call it.
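To illustrate the “repeatable” part, here is a rough sketch of how prefixes and gateways for new overlay segments could be planned in code. This is not the NSX-T API; it only uses Python’s standard `ipaddress` module, and the pool `10.100.0.0/16`, the segment names, and the “first host is the gateway” convention are all assumptions made for the example.

```python
import ipaddress

# Hypothetical address pool reserved for overlay segments.
OVERLAY_POOL = ipaddress.ip_network("10.100.0.0/16")


def plan_segments(names, pool=OVERLAY_POOL, prefixlen=24):
    """Assign each named segment the next free subnet and a gateway address."""
    plan = []
    for name, net in zip(names, pool.subnets(new_prefix=prefixlen)):
        # Assumed convention: the first usable host is the gateway.
        gateway = next(iter(net.hosts()))
        plan.append({
            "name": name,
            "prefix": str(net),
            "gateway": f"{gateway}/{net.prefixlen}",
        })
    return plan


if __name__ == "__main__":
    for segment in plan_segments(["web", "app", "db"]):
        print(segment)
```

A plan like this would then be fed to whatever automation actually creates the segments; the underlay /24 never changes.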

With NSX-T, I can support my immediate DC networking needs within my virtualized environment.

While NSX-T provides L3VPN, IPSec VPN, and L2VPN services, these have generally been used to connect a few branch sites or cloud locations. That covers what I need for a few branches, but it’s highly manual to set up and not scalable for my needs (and laziness).

How am I addressing connectivity outside my mini-DC? Enter SD-WAN.

No history about SD-WAN, sorry. Briefly what it does:

- Runs a WAN with enterprise-grade capabilities using a combination of private and public circuits

- Automates VPN tunnel provisioning between sites and cloud locations (no more manual IKE templates!)

- Magically sorts out QoS for you

- Provides a single UI where you can run CRUD/management ops on all your branch SD-WAN edges

- Breaks out internet locally

- Gets you to your SaaS, IaaS, and PaaS more efficiently.

- Keeps config and security policy consistent across many branches

I decided to use VMware SD-WAN for my purposes (and for obvious reasons if you decide to look me up). It simplifies my life and does IPSec setup (and more).

Now for the backstory….

I originally intended to have this mini-DC set up to test a variety of things, and along my journey I stumbled across Kubernetes. I’m not even going to get into detail about Kubernetes in this post, but for the uninitiated:

Kubernetes is an open-source application platform that provides scalability, auto-healing, and life-cycle management for applications, using declarative state.

Declarative configuration: I always want this output, K8s CLUSTER MAKE THIS HAPPEN.

For me, Kubernetes transforms the way I achieve scale, HA, and DR, globally.

So, I purpose-built this lab to be able to deploy some application in Kubernetes, and spread this application between my data center, my cloud locations, and maybe a few branches. Further to this, I aim to have Kubernetes ensure that if any part of my spread-application fails, it knows how and where to rebuild it, so “I always get the output I want” using declarative state.
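The “I always get the output I want” idea boils down to comparing desired state with observed state and acting on the difference. Here is a toy sketch of that comparison in Python. It is not Kubernetes code, and the app names and replica counts are made up; it just shows the shape of a reconcile step.

```python
def reconcile(desired, observed):
    """Return the actions needed to move observed replica counts to desired.

    Both arguments map an app name to a replica count.
    """
    actions = []
    for app, want in sorted(desired.items()):
        have = observed.get(app, 0)
        if have < want:
            actions.append(("start", app, want - have))
        elif have > want:
            actions.append(("stop", app, have - want))
    # Anything running that was never declared gets removed.
    for app in sorted(set(observed) - set(desired)):
        actions.append(("stop", app, observed[app]))
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "api": 2}
    observed = {"web": 1, "api": 2, "old": 1}  # two web replicas have failed
    print(reconcile(desired, observed))
```

Run this in a loop against live state and you get the auto-healing behavior: a failure shows up as a difference, and the difference becomes an action.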

In future posts, I’ll break down several sections a bit further and expand on the lab topology, providing more detail. Stay tuned and thank you for reading!