Let’s talk about connectivity…

Over the last several years, we’ve seen the rise of cloud-native patterns, which usually means running microservices as containers. Those containers are likely to run on a ubiquitous and widely known platform: Kubernetes, or K8S for short.

This post isn’t about K8S; it’s about how these microservices transact with each other, and how end users and other applications interact with them.

An application normally has multiple interfaces. The front end and the back end are critical because they serve as endpoints for our requests, while also serving each other.

More importantly, when we run curl against an application, we expect some value or output to be returned. But who is arbitrating and responding to that request? Quite possibly an API Gateway, or even a Service Mesh.

What is an API?

API stands for Application Programming Interface. It is an interface through which you interact with parts of an application so that you can:

- Retrieve objects or data to store in some repository

- Gather metrics from your application’s subcomponents to understand its health

- Manipulate a particular part of an application to do a certain thing

- Use another application to do something on behalf of your application

- Develop and provide your own custom front-end to leverage another application as a backend

When you have access to an application and its smaller pieces, you have the flexibility to use just the pieces you need. If you own the application, its microservices are easier to swap out because they are decoupled. API Gateways help with this decoupling.
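To make the idea concrete, here is a minimal sketch of an application exposing two API endpoints using only Python’s standard library. One endpoint returns data and the other reports health. The paths `/items` and `/health`, the sample data, and the `route` and `make_server` helpers are all hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical in-memory data exposed by this example API.
ITEMS = [{"id": 1, "name": "widget"}, {"id": 2, "name": "gadget"}]


def route(path):
    """Map a request path to (HTTP status, JSON-serializable payload)."""
    if path == "/items":
        return 200, ITEMS  # retrieve data
    if path == "/health":
        return 200, {"status": "ok", "item_count": len(ITEMS)}  # health metrics
    return 404, {"error": "not found"}


class ApiHandler(BaseHTTPRequestHandler):
    """Serializes whatever `route` returns as a JSON HTTP response."""

    def do_GET(self):
        status, payload = route(self.path)
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=8080):
    """Create (but do not start) the server; call serve_forever() to run it."""
    return ThreadingHTTPServer(("127.0.0.1", port), ApiHandler)
```

If you run `make_server().serve_forever()` and then `curl http://127.0.0.1:8080/health` from another terminal, you should get `{"status": "ok", "item_count": 2}` back.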

What is an API Gateway?

An API Gateway is a system that accepts incoming requests destined for the APIs or back ends of an application. If you are familiar with network gateways or routers, the concept is similar: a gateway accepts a request and directs it to a destination. An API Gateway operates at Layer 7, which means it understands application-layer protocols such as HTTP and watches for those requests. As a gateway, it must police inbound requests in order to provide:

- Authentication and security of requests

- Appropriate routing of requests

- Rate limiting to maintain service availability (in scale situations)

- Proxying

- Monitoring and health checks
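The policing duties above can be sketched as a toy, in-process model. It rests on a few assumptions:

- API keys stand in for authentication. Real gateways commonly use OAuth 2.0 tokens, JWTs, or mTLS.
- Backends are plain Python functions rather than HTTP upstreams.
- Rate limiting uses a simple fixed window per client.

`API_KEYS`, `BACKENDS`, and `ApiGateway` are hypothetical names, not any product’s API.

```python
import time
from collections import defaultdict

# Hypothetical API keys and backends; a real gateway would load these from config.
API_KEYS = {"key-abc": "mobile-app", "key-xyz": "partner-portal"}
BACKENDS = {
    "/orders": lambda path: (200, f"orders service handled {path}"),
    "/users": lambda path: (200, f"users service handled {path}"),
}


class ApiGateway:
    """Toy Layer 7 gateway: authenticate, rate-limit, route, proxy, and count."""

    def __init__(self, limit_per_window=5, window_seconds=1.0, clock=time.monotonic):
        self.limit = limit_per_window
        self.window = window_seconds
        self.clock = clock
        self.windows = {}                # client -> (window_start, request_count)
        self.metrics = defaultdict(int)  # status code -> count, for monitoring

    def _allow(self, client):
        """Fixed-window rate limiting per client."""
        now = self.clock()
        start, count = self.windows.get(client, (now, 0))
        if now - start >= self.window:
            start, count = now, 0        # a new window begins
        if count >= self.limit:
            return False
        self.windows[client] = (start, count + 1)
        return True

    def handle(self, api_key, path):
        client = API_KEYS.get(api_key)
        if client is None:
            status, body = 401, "unauthorized"       # authentication
        elif not self._allow(client):
            status, body = 429, "too many requests"  # rate limiting
        else:
            prefix = next(
                (p for p in BACKENDS if path == p or path.startswith(p + "/")), None
            )
            if prefix is None:
                status, body = 404, "no route"       # routing
            else:
                status, body = BACKENDS[prefix](path)  # proxying to the backend
        self.metrics[status] += 1                    # monitoring
        return status, body
```

The injectable `clock` keeps the limiter testable. A production gateway would also keep rate-limit state somewhere shared, so that multiple gateway replicas enforce one limit.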

What is a Service Mesh?

A Service Mesh is a system that gives service endpoints, or microservices, a secure way to communicate with each other. It can also control ingress and load balancing toward different parts of an application. In this approach, a data plane is distributed and placed alongside each service endpoint. The data plane is then directed and managed by a control plane, which enforces a variety of policies used for:

Traffic management

- Let’s route traffic towards its intended destination

- Let’s route traffic to an alternative destination that provides the same output and results

- Let’s circuit break in the event of increased latency and bypass a service

- We can distribute workloads to different environments and still reach them

- Let’s deploy different versions of our application and get feedback (Canaries, Blue-Green Deployments)
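Circuit breaking and routing to an alternative destination go hand in hand. Here is a minimal sketch of the pattern, assuming a timeout or error surfaces as an exception from the primary call. In a mesh, the sidecar would apply this on the application’s behalf from declarative config. `CircuitBreaker` and `call_with_failover` are hypothetical names used for illustration.

```python
import time


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; allows a trial call after `reset_after` seconds."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def is_open(self):
        if self.opened_at is None:
            return False
        if self.clock() - self.opened_at >= self.reset_after:
            return False  # half-open: let a trial request through
        return True

    def record(self, ok):
        if ok:
            self.failures, self.opened_at = 0, None  # healthy again: close
        else:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit


def call_with_failover(primary, fallback, breaker, request):
    """Send to `primary` unless its circuit is open; otherwise bypass it to `fallback`."""
    if not breaker.is_open():
        try:
            result = primary(request)
            breaker.record(ok=True)
            return result
        except Exception:
            breaker.record(ok=False)
    return fallback(request)
```

If a half-open trial call fails, the failure count is still at or above the threshold, so the circuit reopens immediately.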

Security

- Let’s encrypt communication between trusted endpoints with mutual TLS (mTLS)

- Let’s block unnecessary or illegitimate requests
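Blocking illegitimate requests usually comes down to a default-deny policy keyed on the caller’s identity. mTLS makes that identity trustworthy, because both sides present certificates. The sketch below assumes the sidecar has already completed the mTLS handshake and extracted the peer’s identity as a SPIFFE-style string, as some meshes do. The `Rule` shape, service names, and identities are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Allow `source` identity to call `methods` on paths under `path_prefix` of `dest`."""
    source: str
    dest: str
    methods: frozenset
    path_prefix: str = "/"


# Hypothetical policy. Identities are SPIFFE-style strings such as a mesh might
# extract from a peer's mTLS certificate; this is not any real product's schema.
POLICY = [
    Rule("spiffe://cluster.local/ns/shop/sa/frontend", "orders",
         frozenset({"GET", "POST"}), "/orders"),
    Rule("spiffe://cluster.local/ns/shop/sa/orders", "payments",
         frozenset({"POST"}), "/charge"),
]


def is_allowed(source_identity, dest, method, path, policy=POLICY):
    """Default deny: a request passes only if some rule explicitly matches it."""
    return any(
        rule.source == source_identity
        and rule.dest == dest
        and method in rule.methods
        and path.startswith(rule.path_prefix)
        for rule in policy
    )
```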

Observability

- Who’s who (Service Discovery)

- What can we see?

- When we see something going wrong, what automated steps are taken to resolve it?

- Can we trace through endpoints to see where things went wrong?
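Tracing through endpoints works because every hop forwards a shared trace identifier. Meshes and tracing libraries commonly use the W3C Trace Context `traceparent` header for this. Even with a sidecar generating spans, the application usually still has to copy the tracing headers from inbound to outbound requests. Below is a simplified sketch of that propagation step; `child_traceparent` is a hypothetical helper.

```python
import re
import secrets

# W3C Trace Context header: version-traceid-parentid-flags, lowercase hex.
# Simplified: this does not reject the all-zero IDs the spec treats as invalid.
TRACEPARENT = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def child_traceparent(incoming=None):
    """Return the traceparent to send downstream, keeping the caller's trace ID.

    If there is no valid incoming header, this hop starts a new trace.
    """
    match = TRACEPARENT.match(incoming or "")
    if match:
        trace_id, flags = match.group(2), match.group(4)
    else:
        trace_id, flags = secrets.token_hex(16), "01"  # new trace, marked sampled
    span_id = secrets.token_hex(8)  # a fresh span ID for this hop
    return f"00-{trace_id}-{span_id}-{flags}"
```

Because every service keeps the same trace ID, a tracing backend can stitch the spans together and show where along the path things went wrong.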

With Kubernetes, we follow a sidecar pattern in which a secondary sidecar container, the data plane of the Service Mesh, is deployed alongside the application container in each pod. The pod’s primary application container sends its traffic through this data-plane container. The data plane is in turn controlled by Service Mesh control-plane pods, which usually live in the same cluster but in a different namespace.
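Conceptually, the split between the two planes looks like the toy model below. The control plane holds policy and pushes it to the sidecars. Each sidecar applies that policy to every outbound call, so the application code doesn’t have to. In a real mesh, the sidecar is a separate proxy process (Istio uses Envoy, for example) and configuration is pushed over an API. Here everything is in-process Python, and `ControlPlane`, `Sidecar`, the `retries` key, and the `x-source-service` header are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Sidecar:
    """Data plane next to one app container: all outbound calls pass through here."""
    service: str
    config: dict = field(default_factory=dict)

    def send(self, upstream, request):
        headers = dict(request.get("headers", {}))
        headers["x-source-service"] = self.service  # added without app involvement
        attempts = 1 + self.config.get("retries", 0)  # retry policy from the control plane
        last_error = None
        for _ in range(attempts):
            try:
                return upstream({**request, "headers": headers})
            except ConnectionError as err:
                last_error = err
        raise last_error


@dataclass
class ControlPlane:
    """Holds per-service policy and pushes it to every registered sidecar."""
    policies: dict = field(default_factory=dict)  # service name -> policy dict
    sidecars: list = field(default_factory=list)

    def register(self, sidecar):
        self.sidecars.append(sidecar)
        sidecar.config = dict(self.policies.get(sidecar.service, {}))

    def set_policy(self, service, policy):
        self.policies[service] = policy
        for sc in self.sidecars:
            if sc.service == service:
                sc.config = dict(policy)  # "push" the new config to the data plane
```

The design point is that a policy change reaches every matching sidecar without redeploying or modifying the application.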

A Service Mesh can also extend to directing load balancers and DNS, so that applications can be spread across multiple providers.

I must also mention LETS, which stands for Latency, Errors, Traffic, and Saturation: the signals a Service Mesh aims to observe and act upon.
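LETS covers the same four signals Google’s SRE book calls the “four golden signals.” Below is a sketch of how a window of request records observed by a sidecar could be reduced to those four numbers. Several choices here are assumptions for illustration:

- the `RequestRecord` shape
- counting only 5xx responses as errors
- using a nearest-rank 95th percentile for latency
- defining saturation as in-flight requests over a concurrency limit

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_ms: float
    status: int


def lets_summary(records, window_seconds, in_flight, max_concurrency):
    """Summarize Latency, Errors, Traffic, and Saturation for one time window."""
    latencies = sorted(r.latency_ms for r in records)
    # Nearest-rank p95: rank = ceil(0.95 * n), computed with integers to avoid float error.
    rank = (95 * len(latencies) + 99) // 100
    p95 = latencies[rank - 1] if latencies else 0.0
    errors = sum(1 for r in records if r.status >= 500)
    return {
        "latency_p95_ms": p95,
        "error_rate": errors / len(records) if records else 0.0,
        "traffic_rps": len(records) / window_seconds,
        "saturation": in_flight / max_concurrency,
    }
```

A mesh could compare numbers like these against thresholds to decide when to open a circuit breaker or shift traffic away from a service.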

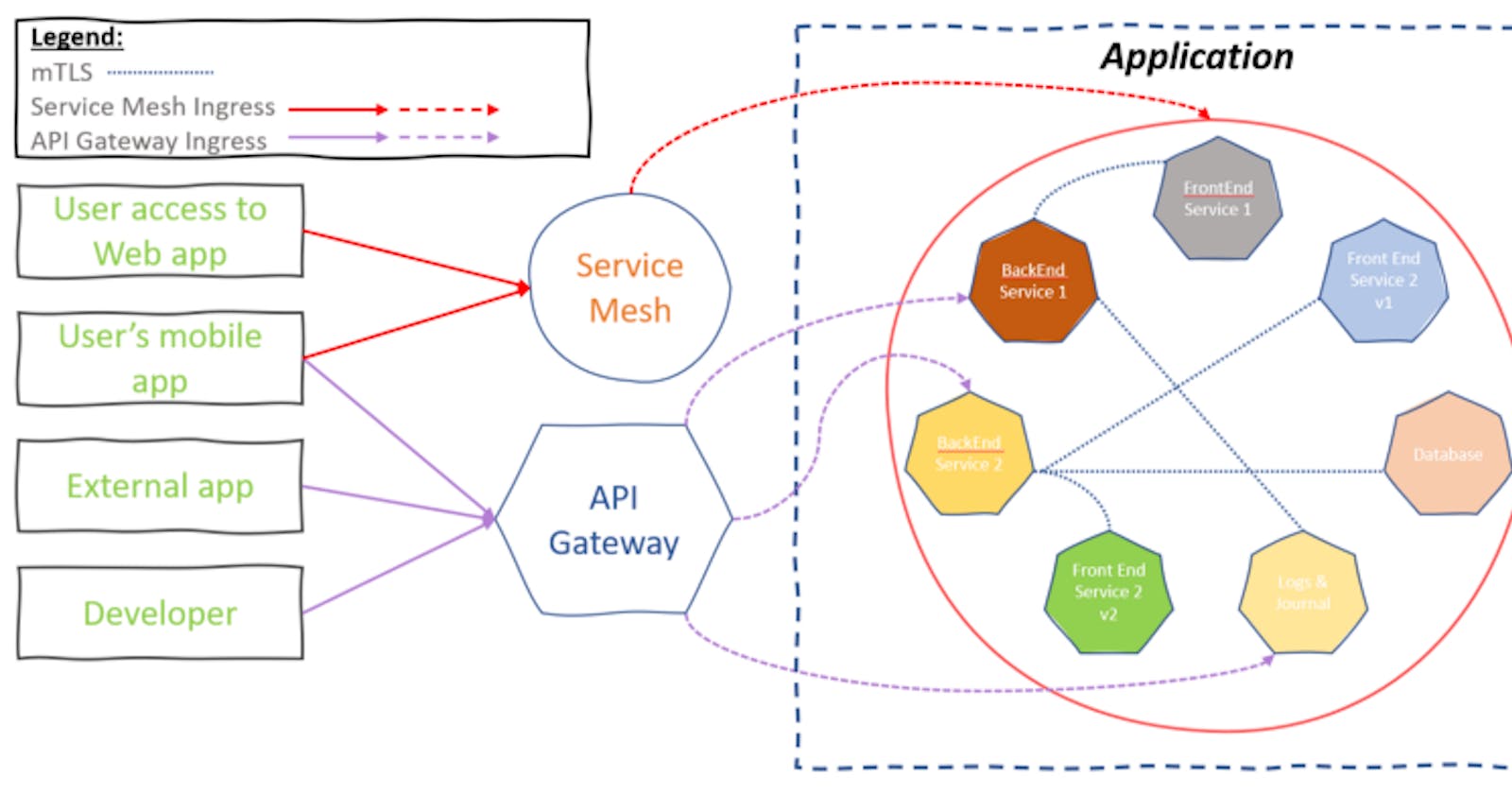

What does it look like?

This diagram illustrates, at a high level, the various flows of traffic between the requestor, the API Gateway and/or Service Mesh, and the microservice. There’s significantly more to this story, but we can save that for another time.

Who provides these capabilities?

There are several providers out there; here is a list of some.

How are they different?

An API Gateway needs to establish whether an inbound request is legitimate and directed toward its intended destination. Using an API Gateway exposes an application’s endpoints for other applications to use. A Service Mesh, on the other hand, focuses more on establishing, securing, and monitoring point-to-point communication between services, even though it can also offer a point of ingress. It almost sounds like a VPN.

How are they similar?

Both control the flow of traffic toward its intended destination. Both can identify what a flow of traffic is, as well as what it contains, and from these details infer what might be going on. Most importantly, both operate at Layer 7.

Can both be used collaboratively?

Yes. While there is some overlap, each is intended to control and manipulate traffic from the point of view of the request being made. In the future, I suspect you’ll start to see combined offerings that bundle API management and gateway capabilities with a Service Mesh. These would capture both North-South traffic (into the application) and East-West traffic (between services), along with their patterns. This may help avoid confusion and clashing “swim lanes,” while providing an all-in-one experience.

What are we aiming to solve?

We are aiming to solve the problem of connectivity between service endpoints while securing the entry point to our applications’ APIs. This lets us better decouple microservices, improve lifecycle operations, and continually iterate on the application with new code and features. Both technologies can help keep consumers from noticing outages, downtime, or availability concerns.

A great example of this is Uber and Uber Eats. Both make use of the Google Maps API, but do so via an API Gateway, primarily because we’d want to “rate-limit” requests and not over-tax the Maps API.
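A token bucket is one common way a gateway can enforce this kind of outbound limit. It allows short bursts up to a fixed capacity while capping the sustained request rate. In this sketch, the `upstream` callable stands in for the real Maps API request. The rate and capacity values and the `call_maps_api` helper are hypothetical, not Uber’s or Google’s actual configuration.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full
        self.updated = clock()

    def try_acquire(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def call_maps_api(bucket, request, upstream):
    """Gateway-side guard: forward only if the shared quota allows it."""
    if not bucket.try_acquire():
        return 429, "rate limit exceeded, retry later"
    return upstream(request)
```

Placing the bucket in the gateway, rather than in each client app, means every consumer draws from the same quota.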

An example of leveraging a Service Mesh is a client considering moving containerized workloads from one cloud provider to another. Migration is tricky and is handled by three things:

- A new deployment of the same application

- Load balancers directing traffic (as directed by the Service Mesh)

- DNS

By combining these three with a Service Mesh, applications can be migrated seamlessly, helped by the fact that Kubernetes doesn’t care which provider it runs on. The same approach can be leveraged for Canary deployments of a new version of an application, where a portion of requests is directed to the new version while most requests go to the old one. The same applies to Blue-Green deployments, and to high availability and disaster recovery of these containerized workloads. Perhaps we are even migrating an application away from a set of VMs, and a Service Mesh can facilitate that for us.
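Canaries, Blue-Green rollouts, and cloud-to-cloud migrations all reduce to splitting requests between destinations by weight and then shifting the weights over time. Meshes usually express this as declarative route weights. The sketch below shows the underlying mechanism using smooth weighted round-robin, the deterministic scheme nginx uses for weighted upstreams. The destination names `v1` and `v2` are hypothetical; for a migration, they could just as well be two clusters in different clouds.

```python
class WeightedSplitter:
    """Smooth weighted round-robin: over every sum(weights) picks, each
    destination is chosen exactly `weight` times, evenly interleaved."""

    def __init__(self, weights):
        self.set_weights(weights)

    def set_weights(self, weights):
        """Shift traffic gradually, e.g. 90/10 -> 50/50 -> 0/100."""
        self.weights = dict(weights)  # destination -> integer weight
        self.current = {d: 0 for d in self.weights}

    def pick(self):
        total = sum(self.weights.values())
        for dest, weight in self.weights.items():
            self.current[dest] += weight  # everyone earns credit by weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= total  # the winner pays back the total
        return chosen
```

Starting a canary at `{"v1": 9, "v2": 1}` sends one request in ten to the new version. Moving to `{"v1": 0, "v2": 1}` completes the cutover, and the same steps in reverse give you a rollback.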

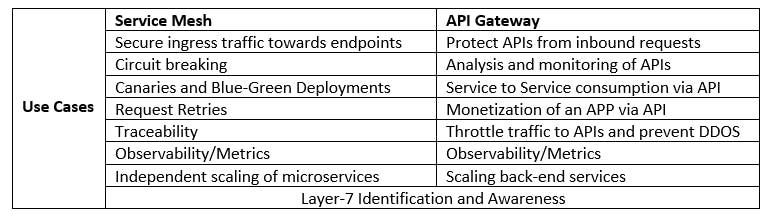

Let’s review the use-cases

How can I get started?

For API Gateway:

- SpringCloud Gateway — https://spring.io/projects/spring-cloud-gateway

- Kong API Gateway — https://docs.konghq.com/gateway-oss/2.5.x/getting-started/quickstart/

- Tyk API Gateway — https://tyk.io/sign-up/

For Service Mesh:

- Istio — https://istio.io/latest/docs/setup/getting-started/

- Linkerd — https://linkerd.io/2.10/getting-started/

- Kuma — https://konghq.com/blog/getting-started-kuma-service-mesh/

It’s also worth checking out the CNCF Landscape as there are other API Gateway and Service Mesh offerings.

Looking for an enterprise-grade offering? Why not check out VMware Tanzu Service Mesh? One of my peers, Niran, wrote a great blog post about the extensibility TSM provides through Global Namespaces, which offer better controls and mechanisms for distributing your applications in a multi-cloud world! Check it out here.

Final thoughts

While API Gateways and Service Meshes have existed for some time, I am noticing a much larger uptick in their adoption. There are a variety of reasons, but the most common are:

- Leveraging microservice patterns

- Securing microservices

- Scaling them

- Observing them

- Interacting with them

- Manipulating them

- Modifying them without any impact to a requestor

- Distributing them

In the near and not-so-distant future, we may see a consolidation of the two through enterprise offerings. We may even see Service Mesh secure user endpoints, which could redefine the way we approach VPN solutions and end-user connectivity. In future posts, I’ll dive deeper into the capabilities of API Gateways and Service Meshes.

Thank you for reading!